You know your song is great, but is it a hit? Will it inspire listeners to share it with their friends, hand over their email address, or maybe even open their wallets? You need feedback from average music fans who have nothing to lose by being honest.

SoundOut compares your song to 50,000 others from both major labels and indies. They promise to tell you how good your track is with guaranteed 95% accuracy (I’m still trying to wrap my brain around what that means). Starting at $40, they compile the results of 80 reviews into an easy-to-read PDF report. Top-rated artists are considered for additional publishing and promotional opportunities.

The head of business development invited me to try out the service for free with three 24-hour “Express Reports” (a $150 value). I used the feedback from my Jango focus group to select the best and worst tracks I recorded for my last album, along with my personal favorite, an 8-minute progressive house epic. You can download all three of my PDF reports here.

Summary of Results

I can describe the results in one word: brutal. None of the songs are deemed worthy of being album tracks, much less singles. In the most important metric, Market Potential, my best song received a 54%, my worst 39%, and my favorite a pathetic 20%. Those numbers stand in stark contrast to my stats at Jango, for reasons I’ll explain in a bit.

Despite the huge swing in percentages, the track ratings only vary from 4.7 to 5.9, which implies Market Potential scores of 47% to 59%. For better or for worse, those scores are weighted using “computational forensic linguistic technology and other proprietary SoundOut techniques.” Even the track rating score is weighted! I would love to see a raw average of the 80 reviewers’ 0-10 point ratings, because I don’t trust the algorithms. The verbal smokescreen used to describe them doesn’t exactly inspire confidence (isn’t any numerical analysis “computational”?).

Perhaps to soften the blow, the bottom of the page lists three songs by well-known artists in the same genre that have similar market potential. Translation: your songs suck, but so do these others by major label acts you look up to. Curiously, two of the same songs are listed on both my 39% and 20% reports, which casts further doubt on the underlying algorithms.

Detailed Feedback

I found the Detailed Feedback page to be the most useful. It tells you who liked your song based on age group and gender. I don’t know exactly what “like” translates to on a 10-point scale, but it makes sense that 25- to 34-year-olds rate my retro ’80s song higher than 16- to 24-year-olds, since the former were actually around back then.

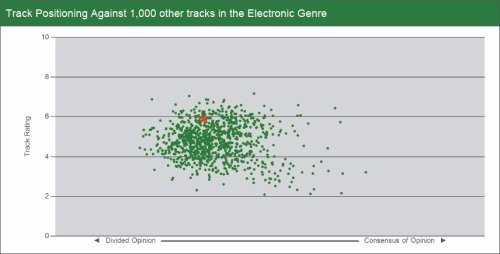

The track positioning chart maps your song relative to 1,000 others in the genre, based on rating and consensus of opinion. It’s a clean and intuitive representation of how your song stacks up to the competition. Still, it would be nice to know what criteria (if any) were used to select those 1,000 tracks.

Review Analysis

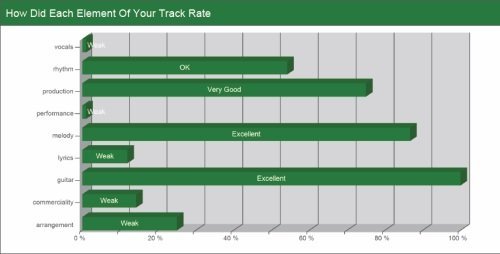

The Review Analysis section is utterly useless. The elements listed change from song to song. The only element that was consistently judged excellent is guitar, which is quite generous considering there’s no guitar in any of my songs.

The actual reviews are no better or worse than the comments on my Jango profile. They ranged from overly enthusiastic (“THIS SONG WAS GREAT I REALLY LIKED IT IT HAD A GOOD BEAT TO IT I MY HAVE TO DOWNLOAD IT MYSLEF”) to passive aggressive (“this song wasn’t as bad as it could be”). At the very least, the reviews prove there are real people behind the numbers.

Unfortunately for me, they don’t appear to be fans of electronic music. Not a single reviewer mentioned an electronic act. Instead of the usual comparisons to The Postal Service, Owl City, and Depeche Mode, I got Michael Jackson, Whitney Houston(!), and Alan Parsons Project.

Scouting for Fame and Fortune

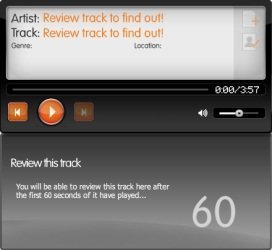

As puzzling as the mention of guitar in the review analysis was, it was a comment about my “20% song” that convinced me to review the review process. It said “the lack of vocals is a shame.” Those seven words reveal a key flaw in their methodology: reviewers only have to listen to the first 60 seconds of your song.

If you’re considering giving SoundOut a whirl, I highly recommend trying your hand as a scout on their sister site, Slicethepie. In just five minutes, you too can be one of the “real music fans and consumers” reviewing songs for SoundOut. You’ll start well below minimum wage at $0.02 per review, but top performers can level up to $0.20 a pop.

Hitting the play button starts the 60-second countdown until you can start typing your review. If you don’t come up with at least a couple of quality sentences, it nags you to try harder. The elements in each track are not explicitly rated. Instead, the text of each review is analyzed, as evidenced by the scolding I received when one of my reviews was rejected:

“A review of the track would be good! You haven’t mentioned any of our expected musical terms - please try again…”

I didn’t appreciate the sarcasm after composing what I considered to be a very insightful review mentioning the production and drums, both of which are scored elements. This buggy behavior may explain my stellar air guitar scores. Perhaps my reviewers wrote “it would be NICE to hear some GUITAR” and the algorithm mistakenly connected the two words.

Even though I only selected electronic genres when I created my profile, I heard everything from mainstream rock/pop to hip hop, country, and metal. Reviewers are not matched to songs by genre. Everyone reviews everything, which opens us all up to Whitney Houston comparisons.

Conclusion

Can you tell if a song is great by listening to the first minute? No, but you can tell if it’s a hit.

If you operate in a niche genre, searching for your 1,000 true fans, SoundOut may not be a good fit. For example, my best song doesn’t pay off until you hear the lyrical twist in the last chorus, and my “20% song” doesn’t have vocals for the first two minutes. With that in mind, how useful is a comprehensive analysis of the first 60 seconds? It’s even less useful when the data comes from reviewers who aren’t fluent in the genre.

While I have some reservations about their methodology, SoundOut is the fastest way I know of to get an unbiased opinion from a large sample of listeners. Use it wisely!

UPDATE: SoundOut posted a detailed response in the comments here.

Brian Hazard is a recording artist with sixteen years of experience promoting his eight Color Theory albums. His Passive Promotion blog emphasizes “set it and forget it” methods of music promotion. Brian is also the head mastering engineer and owner of Resonance Mastering in Huntington Beach, California.